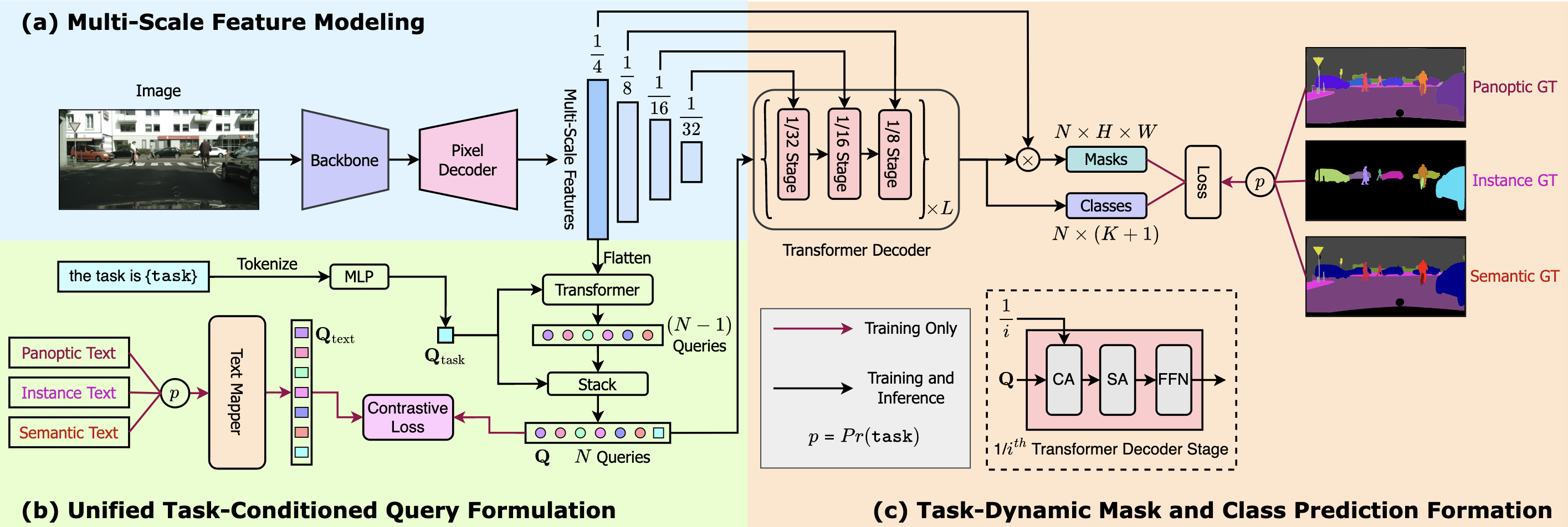

In Computer Vision, image segmentation is a crucial area. It divides an image into segments, turning the raw pixels into a representation that is more meaningful and easier to analyze. This blog dives into several prominent architectures that play a vital role in the development of image segmentation technologies.

U-Net Based

Originally designed for medical image segmentation, the U-Net architecture is notable for making efficient use of the available training data through a symmetric encoder-decoder structure.

Architecture: U-Net is designed with:

- An encoder path to capture context and a decoder path to enable precise localization.
- Skip connections that help recover spatial information lost during downsampling.

Metrics/Loss: The performance of U-Net is often evaluated using the Intersection over Union (IoU) and the Dice coefficient. Common loss functions include:

- Cross-entropy loss, applied per pixel (binary cross-entropy for foreground/background masks).
- Dice loss, particularly useful for data with imbalanced foreground and background.

Transformer Based

Transformers have recently been adapted for image segmentation, leveraging their ability to model global dependencies effectively.

Architecture: Transformer models for segmentation such as SETR or SegFormer use the transformer's self-attention mechanism to model long-range dependencies across the image. These models often feature:

- A transformer encoder that processes the image as a sequence of patches.
- A decoder that reconstructs the segmentation map from the encoded features.

Metrics/Loss: Transformer-based models are evaluated with the standard segmentation metrics, IoU and the Dice coefficient. Loss functions typically include:

- Cross-entropy loss, calculated on a per-pixel basis.
- Focal loss, designed to address class imbalance by focusing on harder examples.

DeepLab

DeepLab is a series of models that excel at semantic segmentation. They use atrous (dilated) convolutions to capture multi-scale information without losing resolution.
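The following minimal 1-D sketch in plain Python makes "without losing resolution" concrete. It is only an illustration, not DeepLab's code, which applies 2-D atrous convolutions inside a deep-learning framework. The helper names `atrous_conv1d` and `receptive_field` are hypothetical. A dilation rate r places the kernel taps r samples apart, so a 3-tap kernel covers 2r + 1 input positions, while zero padding keeps the output as long as the input.

```python
def atrous_conv1d(signal, kernel, rate=1):
    """1-D atrous (dilated) convolution with 'same' zero padding.

    Like deep-learning frameworks, this computes a cross-correlation
    (the kernel is not flipped). rate=1 is an ordinary convolution.
    """
    k = len(kernel)
    span = (k - 1) * rate        # distance between the first and last tap
    pad = span // 2              # zero padding so output length == input length
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i - pad + j * rate     # taps are `rate` samples apart
            if 0 <= idx < len(signal):   # positions outside the signal count as zero
                acc += w * signal[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, rate):
    """Number of input positions covered by one dilated kernel."""
    return (kernel_size - 1) * rate + 1


if __name__ == "__main__":
    x = [0, 0, 0, 0, 1, 0, 0, 0, 0]   # a single impulse in the middle
    k = [1, 1, 1]
    for r in (1, 2, 4):
        y = atrous_conv1d(x, k, rate=r)
        # rate, output length, receptive field, response to the impulse
        print(r, len(y), receptive_field(len(k), r), y)
```

With `rate=4`, the same three weights read positions 0, 4 and 8 of the signal, yet the output still has 9 samples. The field of view grows without any downsampling. ASPP applies several such rates in parallel and combines the results.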
Architecture: DeepLab architectures utilize:

- Atrous Spatial Pyramid Pooling (ASPP) to robustly segment objects at multiple scales.
- An encoder-decoder structure with depthwise separable convolutions to reduce computation.

Metrics/Loss: DeepLab models are evaluated with pixel accuracy and mean IoU. Losses include:

- Softmax cross-entropy loss for classifying each pixel.
- L1 or L2 losses if depth prediction is integrated into the task.

Each of these architectures offers unique advantages in handling the complexities of image segmentation, making them suitable for a variety of applications, from medical imaging to autonomous driving.
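The metrics and losses named above are simple to state. The following plain-Python sketch computes them on flattened masks, given as lists of 0/1 labels or predicted probabilities. The function names are hypothetical. Real pipelines compute the same quantities on tensors. The focal-loss defaults alpha = 0.25 and gamma = 2 are the commonly used values, not requirements.

```python
import math


def iou(pred, target):
    """Intersection over Union for two binary masks (flat 0/1 lists)."""
    inter = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    return inter / union if union else 1.0   # both masks empty: perfect match


def mean_iou(pred, target, num_classes):
    """Mean IoU over classes for integer label maps; classes absent from both are skipped."""
    scores = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union:
            scores.append(inter / union)
    return sum(scores) / len(scores)


def dice(pred, target):
    """Dice coefficient: 2|A ∩ B| / (|A| + |B|)."""
    inter = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(pred) + sum(target)
    return 2 * inter / total if total else 1.0


def pixel_accuracy(pred, target):
    """Fraction of pixels whose label matches (works for multi-class labels too)."""
    return sum(p == t for p, t in zip(pred, target)) / len(target)


def soft_dice_loss(probs, target, eps=1e-6):
    """1 - Dice computed on probabilities instead of hard labels, so it is differentiable."""
    inter = sum(p * t for p, t in zip(probs, target))
    total = sum(probs) + sum(target)
    return 1 - (2 * inter + eps) / (total + eps)


def binary_focal_loss(probs, target, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean focal loss; the (1 - p_t)^gamma factor down-weights easy pixels."""
    total = 0.0
    for p, t in zip(probs, target):
        p = min(max(p, eps), 1 - eps)      # clamp to avoid log(0)
        p_t = p if t else 1 - p            # probability assigned to the true class
        a_t = alpha if t else 1 - alpha    # class-balancing weight
        total += -a_t * (1 - p_t) ** gamma * math.log(p_t)
    return total / len(target)


if __name__ == "__main__":
    pred, target = [1, 1, 0, 0], [1, 0, 0, 0]
    print(iou(pred, target), dice(pred, target), pixel_accuracy(pred, target))
    print(mean_iou(pred, target, num_classes=2))
    # an easy pixel (p = 0.9) contributes far less than a hard one (p = 0.1)
    print(binary_focal_loss([0.9], [1]), binary_focal_loss([0.1], [1]))
```

For the same masks, Dice is never lower than IoU, because Dice = 2·IoU / (1 + IoU). In the example, it is about 0.67 against 0.5.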

Read more...

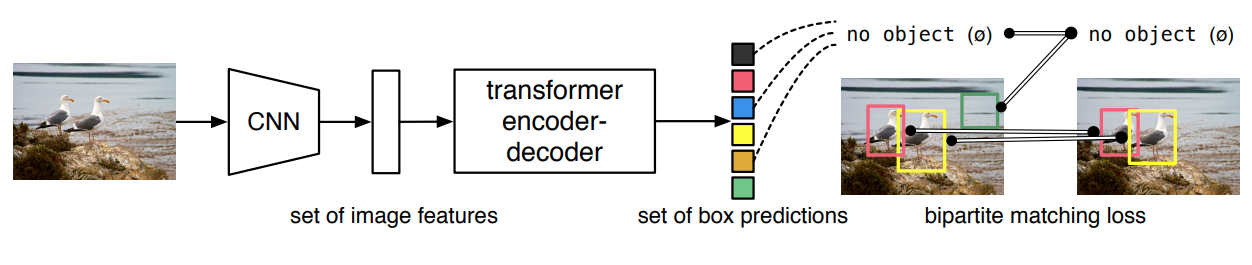

In Computer Vision, object detection is an essential problem, and many architectures have been applied to it: dense networks, CNNs, Transformers, and more. Today, we'll take a deep dive into them.

Transformer Based

The anchor-based approach of YOLO can improve speed, but it needs non-maximum suppression (NMS) and other post-processing steps to filter out noisy, duplicate predictions. Tuning these steps wastes time. A Transformer architecture instead allows end-to-end training and inference.

Architecture:

- Outputs:
  - The classification logits for each query, which predict the probability of each class for the detected object, including a "no-object" class.
  - The coordinates of the predicted bounding boxes, normalized to the size of the image.
  - aux_outputs: additional outputs from each transformer decoder layer, used when auxiliary losses are activated.
- MLP (Multi-Layer Perceptron): a simple fully connected network used within the DETR model to process features (e.g., to compute bounding box coordinates).
- QKV:
  - Q: learned embeddings (object queries) that represent the objects.
  - K and V: projected from the image features by linear layers. K captures the correlation between a query and positions in the image. V provides the information used to update each query's state as it passes through the stacked decoder layers.

Metrics/Loss: SetCriterion is the class that calculates the losses for training the DETR model. It uses:

- A Hungarian matcher to associate predicted boxes and classes with ground-truth boxes and classes.
- Loss terms for classification (loss_ce), bounding box regression (loss_bbox), GIoU (loss_giou) and, optionally, mask losses (loss_mask, loss_dice) if segmentation masks are being predicted.
- A cardinality loss (loss_cardinality), which measures the error between the number of predicted objects and the actual number of objects. In the reference implementation, it is logged for monitoring only and does not propagate gradients.

CNN

Architecture: a CNN scans the image with convolution kernels and learns features from it. YOLO uses an anchor-based technique to stabilize learning: the model classifies anchor boxes and regresses them to minimize an IoU-based loss, i.e. to maximize their overlap with the ground truth.
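Both the anchor matching and the NMS post-processing that DETR avoids rely on box IoU. Here is a minimal plain-Python sketch. It assumes boxes in (x1, y1, x2, y2) coordinates and uses an illustrative IoU threshold of 0.5. `box_iou`, `iou_loss` and `nms` are hypothetical helper names. Production code uses vectorized versions such as `torchvision.ops.nms`, usually applied per class.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)   # zero if boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred, gt):
    """Regression loss: minimizing 1 - IoU maximizes the overlap with the ground truth."""
    return 1.0 - box_iou(pred, gt)


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns the indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)     # highest-scoring remaining box
        keep.append(best)
        # drop every remaining box that overlaps the kept one too much
        order = [i for i in order if box_iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))   # [0, 2]: box 1 overlaps box 0 with IoU ≈ 0.68
```

The 0.5 threshold is exactly the kind of post-processing knob that, as noted above, has to be tuned for anchor-based detectors.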
Typical building blocks and settings in YOLO models include:

- Bottleneck blocks
- Spatial Pyramid Pooling - Fast (SPPF)
- SiLU activations
- Batch Normalization
- Max pooling layers
- Assorted hyperparameters: IoU thresholds and loss functions
- Attention mechanisms

Data Augmentation: Mosaic and MixUp augmentation help avoid overfitting and improve accuracy on the test set.

Loss:

- Varifocal loss
- BCE loss
- CE loss
- Bbox loss
- RotatedBboxLoss
- KeypointLoss

Initialization technique: the biases of the detection head are set at weight initialization. The bias of the detection conv is set to -1 so that almost every anchor output overlaps with the ground truth. The bias of the classification conv is set to -3 so that, from the start, the model predicts "background" for almost every anchor (sigmoid(-3) ≈ 0.047). Since most anchors are background, the model is accurate from the start and only has to learn the positives.

Mask R-CNN/Faster R-CNN

Architecture: DETR and Faster R-CNN have a comparable number of parameters. Faster R-CNN achieves better mAP than DETR on small objects, while DETR is better on larger objects. The "R" stands for region: a Region Proposal Network (RPN) proposes candidate regions, which are then classified and their boxes regressed. Mask R-CNN adds a segmentation (mask) branch on top of the pooled region features (RoIPool, which Mask R-CNN refines into RoIAlign). We need to fine-tune the mask branch in a first phase. After that, object detection is fine-tuned using Feature Pyramid Networks (FPNs).

Loss:

- Classification loss: BCE (multi-label) or CE (single-label).
- Mask loss: binary cross-entropy for every pixel.
- BBox loss: Smooth L1 loss (a regression loss).
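Two numeric details from the list above are sketched here in plain Python, with hypothetical helper names. The first is the Smooth L1 box loss, written with the common `beta` threshold (here 1.0) and summed over the box coordinates. The second is the link between an initial classification bias and the initial predicted probability. A bias of -3 gives sigmoid(-3) ≈ 0.047. `prior_bias` inverts this, which is how RetinaNet-style heads derive the bias from a chosen prior probability instead of picking it by hand.

```python
import math


def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 loss summed over box coordinates."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        # quadratic near zero (smooth gradients), linear for large errors (robust to outliers)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def prior_bias(p):
    """Bias b such that sigmoid(b) == p, i.e. b = -log((1 - p) / p)."""
    return -math.log((1 - p) / p)


if __name__ == "__main__":
    print(smooth_l1([0.5, 3.0], [0.0, 0.0]))   # 0.125 + 2.5 = 2.625
    print(sigmoid(-3))                          # initial foreground probability for bias -3
    print(prior_bias(0.01))                     # bias for a 1% prior, about -4.6
```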

Read more...

Many organisations are now facing a challenge when it comes to choosing and setting up the right messaging infrastructure. It must be able to scale and handle a massive number of parallel connections. This challenge often emerges in IoT and Big Data projects, where a massive number of sensors may be connected, producing messages that need to be processed and stored. This post explains how to address this challenge using a network of brokers in JBoss ActiveMQ.

Read more...

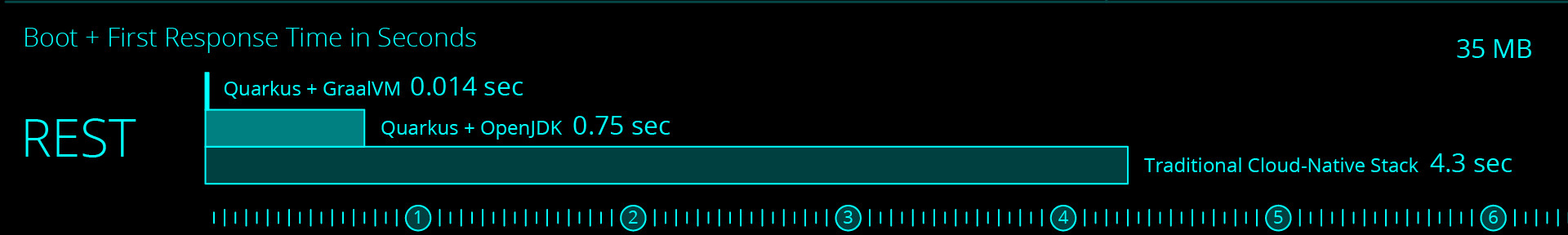

When using Apache Camel with Quarkus today, we are limited to a small number of Camel connectors, and one important missing one is the Apache Kafka connector. A Kafka connector is, however, available for Quarkus through the SmallRye Kafka extension. So in this article, I will show how to wire the SmallRye Kafka connector and Camel together. We will also use the camel-servlet component to reuse the Undertow HTTP endpoint provided by Quarkus, and, to spice things up a little, we will use Camel's XML DSL. All of this will be natively compiled in the end.

Read more...

On March 7, 2019, Red Hat and the JBoss Community announced Quarkus, a Java framework that enables ultra-low boot times and a tiny memory footprint for applications and services. It does this by taking advantage of GraalVM's native compilation capabilities to produce an executable from your Java code. Why is this considered a game changer? In this article, I will give my view and first impressions of this framework as someone who spends a bit of time writing applications and services. I will get my hands dirty by building a working Quarkus + Apache Camel example.

Read more...

OpenShift is a PaaS solution based on Docker and Kubernetes. This article will show you how to install OpenShift Origin in less than 5 minutes and deploy your first Java application to it.

Read more...

Building an integration and services platform with JBoss Fuse is great. It is even better when you add a distributed in-memory database solution such as JBoss Data Grid to the mix. This article will show how to make both technologies work together using the camel-jbossdatagrid component. We will go through the setup of a JBoss Data Grid server with persistence and see how to use it in a JBoss Fuse application through the remote Hot Rod client. Furthermore, we will see how to take advantage of Protocol Buffers and Lucene to index data and perform content-based queries.

Read more...

OpenShift offers a whole pipeline to create container images directly from source code. It works for general-purpose Java applications as well as for Fuse Integration Services (FIS) projects. This post presents how to speed up the build of those images by setting up a local Nexus repository and configuring application templates to use this repository during the build process. The idea is to avoid downloading all the FIS Maven dependencies from the Internet when building a new project. At the time of writing, FIS 2.0 is available as a tech preview.

Read more...

Service and API platforms are mostly real-time oriented and handle small amounts of data that can easily be processed in memory. But for many legacy use cases and for content management systems, handling large data sets is a very common requirement. This article shows how to easily send and receive large data files over HTTP with JBoss Fuse using streams. The main objective of using streams is to avoid running into out-of-memory issues.
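The article does this with JBoss Fuse, but the underlying principle is language-neutral. Instead of loading the whole payload, read and forward it in fixed-size chunks, so that memory use is bounded by the chunk size rather than the file size. The following is a minimal sketch of that idea in plain Python. It is not Camel's or JBoss Fuse's API, and `stream_copy` and the 64 KiB chunk size are hypothetical choices. In a real HTTP service, `src` and `dst` would be the request and response streams. Here, `io.BytesIO` stands in for them.

```python
import io


def stream_copy(src, dst, chunk_size=64 * 1024):
    """Forward a binary stream to dst in fixed-size chunks.

    Only one chunk is held at a time, so memory use is bounded by
    chunk_size no matter how large the payload is.
    """
    copied = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:            # b"" signals end of stream
            break
        dst.write(chunk)
        copied += len(chunk)
    return copied


if __name__ == "__main__":
    # BytesIO objects stand in for the HTTP request/response streams.
    payload = io.BytesIO(b"0123456789" * 100_000)   # 1,000,000 bytes
    sink = io.BytesIO()
    print(stream_copy(payload, sink))               # 1000000
```

The Python standard library's `shutil.copyfileobj(fsrc, fdst, length)` implements the same loop.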

Read more...

Most integration and service platform projects start small, but they need to meet high availability requirements and scale to handle growing workloads. This article shows how to use Ansible to automatically provision servers with JBoss Fuse and JBoss ActiveMQ instances to get a highly available service and messaging platform.

Read more...